Every day, we casually scroll through our Hackernoon, Twitter, Reddit or Facebook feeds without ever seeing the “hidden hand” of the algorithmic overlords that recommend news, promotions and custom-tailored content on various topics of interest to us. For example, Facebook puts more posts from your friends and family in your feed, while other platforms focus more on the relevance of a post.

These social media algorithms have a lot of power in shaping our intuition about what is happening in the world, but they can sometimes be unfair, biased or exploited, mostly to end users’ disadvantage. We are not going to talk about the ethics of such algorithms here, but rather describe the basic technical idea behind them and the challenges involved. So let’s explore what it takes and how we would go about it.

Social Media Algorithmic Arbiters

A social media feed consists of the content its users create. All posts go into one giant global queue, from which they are delivered to users’ feeds. Thousands of such posts compete with one another to rise in the eyes of our algorithmic arbiter.

Once the algorithm has made its decision, only a few of those posts will make it to our news feed. This decision-making process is called scoring, and it takes certain parameters into consideration.

There are many ways to score a post depending on its textual, visual or audio content. Some posts, for example, include videos or images, which require machine learning to distinguish NSFW or spam content from a normal post, but that is a different topic.

When it comes to quantity, the more users there are on a platform, the more content there is to score, filter and match. We need a fast, natural way of keeping users’ feeds relevant to them.

The challenge here is to make a scalable and efficient scoring algorithm that makes sense to the end user and delivers organic and authentic content.

In a perfect world, this would be done in a transparent and standardized manner, but these rules are usually hidden behind complicated metrics.

Inventory

Let’s imagine a simple post and its structure, so we can examine its properties and pick the most relevant parameters for our purpose.

Here is an example structure that we can use to model our scoring formula:

POST

- ID: 0

- TYPE: “text” // this can be video, image or text – in this case, let’s just take text

- COUNTRY: “DE”

- CREATION_DATE: “1234556789” // date and time of creation

- POINTS: 502 // points represent “likes” or “upvotes”

- FLAGS: “appropriate” // a flag set by an ML model

- CONTENT: “…”

- …
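As a rough illustration, the post structure above could be modeled as a Python dataclass. The field names mirror the listing; storing CREATION_DATE as a timezone-aware UTC `datetime` and the `age_hours` helper are assumptions made for this sketch, not part of any particular platform’s schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Post:
    """Hypothetical post model mirroring the POST fields above."""
    id: int
    type: str                # "text", "image" or "video"
    country: str             # country code used for geo buckets, e.g. "DE"
    creation_date: datetime  # assumed to be timezone-aware (UTC)
    points: int              # likes / upvotes
    flags: str               # label assigned by a moderation (ML) model
    content: str

    def age_hours(self, now: datetime) -> float:
        """Hours elapsed between creation and `now` (the T used in scoring)."""
        return (now - self.creation_date).total_seconds() / 3600


if __name__ == "__main__":
    created = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    post = Post(0, "text", "DE", created, 502, "appropriate", "...")
    now = datetime(2024, 1, 1, 18, 30, tzinfo=timezone.utc)
    print(post.age_hours(now))
```

Keeping age as a method rather than a stored field means it is always computed against the moment the feed is built.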

We want our ranking algorithm to be geo-aware. This means that each user’s primary feed is their local country/language-based stream. These local streams are sometimes called buckets.
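A minimal sketch of bucketing, assuming each post carries a country code and that the bucket key is that code alone (a real system might key on a country/language pair instead). The `Post` tuple and `bucket_by_country` function are hypothetical names used only for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class Post(NamedTuple):
    """Minimal stand-in for a post: only the fields bucketing needs."""
    id: int
    country: str


def bucket_by_country(posts: Iterable[Post]) -> Dict[str, List[Post]]:
    """Group posts from the global queue into per-country buckets (local streams)."""
    buckets: Dict[str, List[Post]] = defaultdict(list)
    for post in posts:
        buckets[post.country].append(post)
    return dict(buckets)


if __name__ == "__main__":
    buckets = bucket_by_country([Post(0, "DE"), Post(1, "FR"), Post(2, "DE")])
    print({country: [p.id for p in ps] for country, ps in buckets.items()})
```

Each bucket can then be scored and ranked independently, which is what lets the feed stay local while the queue itself is global.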

Further, we want to take some basic parameters from the post. The two key parameters are the time of post creation and the number of points. Time of creation is useful since we want to make the feed chronological, showing posts from newest to oldest, top to bottom. The number of points (or likes) informs us about user engagement, but this could be any other parameter that you have access to or that is relevant to your use case. In this example, we will use the number of points as an indicator of virality.

The third parameter we need to introduce is a general control parameter, called gravity, that we can tweak manually to apply global overrides.

Social Media Algorithm Scoring Function

Here is what a scoring function would look like:

Score = (P-1) / (T+2)^G

where,

P = points of a post (the -1 negates the submitter’s own vote on their post)

T = time since submission (in hours – this is important)

G = gravity
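The formula translates almost directly into code. In this sketch the default gravity of 1.8 is an assumption (it is the value commonly quoted for Hacker News’ ranking, where this formula is widely reported to come from), and rejecting negative ages is a guard added for illustration.

```python
def score(points: int, age_hours: float, gravity: float = 1.8) -> float:
    """Score = (P - 1) / (T + 2) ** G.

    points:    P, points of the post, including the submitter's own vote
    age_hours: T, hours since submission (must be >= 0)
    gravity:   G, global control parameter; 1.8 is an assumed default
    """
    if age_hours < 0:
        raise ValueError("age_hours must be non-negative")
    return (points - 1) / (age_hours + 2) ** gravity


if __name__ == "__main__":
    print(score(502, 0))   # a fresh post with 502 points
    print(score(502, 24))  # the same post one day later, points unchanged
```

The +2 in the denominator keeps brand-new posts (T = 0) from dividing by zero or receiving an unbounded score.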

Effects of Gravity and Time on the Algorithm

Gravity and time have a significant impact on the score. Generally, these things should hold true:

- The score decreases as time (T) passes, meaning that older posts get lower and lower scores

- The score decreases much faster for older posts if gravity (G) is increased
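Both properties follow directly from the formula. Taking the logarithm of the score (for a post with P > 1, so that the score is positive) and differentiating with respect to T and G gives:

$$
\begin{aligned}
\ln \text{Score} &= \ln(P-1) - G\,\ln(T+2) \\
\frac{\partial \ln \text{Score}}{\partial T} &= -\frac{G}{T+2} < 0 \\
\frac{\partial \ln \text{Score}}{\partial G} &= -\ln(T+2) < 0 \quad \text{since } T \ge 0 \Rightarrow T+2 \ge 2
\end{aligned}
$$

The first line says the score falls over time at a relative rate of G/(T+2) per hour, which is proportional to gravity and shrinks as the post ages, giving the smooth tail of the decay curve. The second says that at any age, raising G lowers the score, and the effect grows with age because ln(T+2) increases with T.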

Simulating the Algorithm

It’s much easier to understand the algorithm if we plot it. We can use Wolfram Alpha to make this plot and tweak it live.

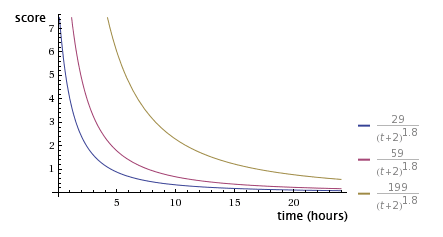

Score over time

Using the formula above over a span of 24 hours with 3 different posts, the plot shows that the yellow one, which has the most points (199), falls down users’ feeds much more slowly. The other posts start decaying much faster, but this is balanced by the time parameter in the formula, which gives us the nice curve that makes the decay smooth.

As you can see, the score decreases a lot as time goes by. For example, a 24-hour-old post will have a far lower score than it did when fresh, regardless of how many points it got, making space for new posts and keeping posts flowing through the feed in a natural way.
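The same experiment can be reproduced as a small table instead of a plot. Only the 199-point post comes from the figure; the other two point counts, the gravity of 1.8 and the assumption that points stay fixed over the 24 hours are hypothetical choices for this sketch.

```python
GRAVITY = 1.8  # assumed gravity value
HOURS = [0, 3, 6, 12, 24]
# Only the 199-point post comes from the plot; the other counts are hypothetical.
POSTS = {"yellow": 199, "post_b": 80, "post_c": 30}


def score(points: int, age_hours: float, gravity: float = GRAVITY) -> float:
    """Score = (P - 1) / (T + 2) ** G."""
    return (points - 1) / (age_hours + 2) ** gravity


def simulate() -> dict:
    """Return {post name: [score at each hour in HOURS]}, points held fixed."""
    return {name: [score(p, t) for t in HOURS] for name, p in POSTS.items()}


if __name__ == "__main__":
    table = simulate()
    print("hour  " + "  ".join(f"{name:>8}" for name in table))
    for i, t in enumerate(HOURS):
        print(f"{t:>4}  " + "  ".join(f"{table[name][i]:8.2f}" for name in table))
```

With points held fixed, every post’s score at 24 hours is (26/2)^1.8 ≈ 101 times smaller than at submission, so the lower-scored posts reach near-zero values first while the 199-point post stays on top.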

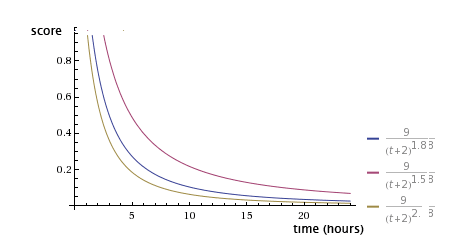

Gravity over time

Here we tweaked only the gravity, while keeping the number of points the same for all 3 posts. As the graph shows, the larger the gravity, the faster the score decreases. The more gravity a post has, the faster it falls down the user’s feed.
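A sketch of the same gravity experiment in numbers. The point count of 100 and the three gravity values are hypothetical, since the figure does not state them.

```python
POINTS = 100                 # hypothetical: same point count for all three posts
GRAVITIES = [1.5, 1.8, 2.2]  # hypothetical gravity values
HOURS = range(0, 25, 6)      # 0, 6, 12, 18, 24


def score(points: int, age_hours: float, gravity: float) -> float:
    """Score = (P - 1) / (T + 2) ** G."""
    return (points - 1) / (age_hours + 2) ** gravity


def gravity_sweep() -> dict:
    """Return {gravity: [score at each hour in HOURS]} for a fixed point count."""
    return {g: [score(POINTS, t, g) for t in HOURS] for g in GRAVITIES}


if __name__ == "__main__":
    for g, scores in gravity_sweep().items():
        print(f"G={g}: " + ", ".join(f"{s:.3f}" for s in scores))
```

Because T + 2 is always at least 2, a larger exponent always means a larger denominator, so at every hour the highest-gravity post has the lowest score.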

Strengths of the Algorithm

- Simple and scalable formula.

- Feed is local, but delivery is global.

- Works well when we want to highlight/push new stuff globally or locally.

- Supports manual override and post-intervention.

- No discrimination or bias in the inventory or data: every post is scored on the same parameters.

- Prevents propagation of unpopular or unwanted posts by decaying them using gravity, leaving more space for the targeted and orchestrated feed source.
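The manual override and gravity-based decay listed above could look roughly like this when ranking a single bucket. The per-post `gravity_override` field, the `rank_feed` function and the 1.8 default are hypothetical names and values for illustration; the article does not specify how overrides are stored.

```python
from dataclasses import dataclass
from typing import List, Optional

DEFAULT_GRAVITY = 1.8  # assumed global default


@dataclass
class RankedPost:
    id: int
    points: int
    age_hours: float
    gravity_override: Optional[float] = None  # hypothetical per-post override


def score(post: RankedPost, default_gravity: float = DEFAULT_GRAVITY) -> float:
    """Score = (P - 1) / (T + 2) ** G, using the post's override if one is set."""
    g = post.gravity_override if post.gravity_override is not None else default_gravity
    return (post.points - 1) / (post.age_hours + 2) ** g


def rank_feed(posts: List[RankedPost],
              default_gravity: float = DEFAULT_GRAVITY) -> List[RankedPost]:
    """Order one bucket's posts from highest to lowest score."""
    return sorted(posts, key=lambda p: score(p, default_gravity), reverse=True)


if __name__ == "__main__":
    feed = rank_feed([
        RankedPost(1, points=120, age_hours=3),
        RankedPost(2, points=120, age_hours=3, gravity_override=3.0),  # demoted post
        RankedPost(3, points=40, age_hours=1),
    ])
    print([p.id for p in feed])
```

Raising one post’s gravity pushes it below a newer post with a third of its points, without touching its point count or any other post’s score.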

Weaknesses of the Algorithm

- Wall-clock hours ‘penalize’ a post even if no one is reading (overnight, for example). A time denominated in ticks of actual activity might address this.

- A post that misses its audience the first time through, perhaps due to a bad headline, may never recover, even with a later flurry of points far beyond what new submissions are getting.
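One way to read “a time denominated in ticks of actual activity” is to replace wall-clock hours with activity hours: site-wide events since submission divided by the event count of a typical hour. This is a hypothetical sketch of that idea, not part of the original formula; the event counts, the baseline and the `activity_hours` name are all assumptions.

```python
from typing import Sequence


def activity_hours(hourly_events: Sequence[int], baseline_per_hour: float) -> float:
    """Convert wall-clock hours into 'activity hours'.

    hourly_events:     site-wide events (e.g. votes) in each hour since submission
    baseline_per_hour: events in a typical hour, so one normal hour counts as 1.0
    """
    if baseline_per_hour <= 0:
        raise ValueError("baseline_per_hour must be positive")
    return sum(hourly_events) / baseline_per_hour


def score(points: int, age: float, gravity: float = 1.8) -> float:
    """Score = (P - 1) / (T + 2) ** G, with T in any consistent time unit."""
    return (points - 1) / (age + 2) ** gravity


# Hypothetical night: 8 hours, each with a tenth of normal traffic.
overnight = [100] * 8
baseline = 1000.0
wall_clock = score(50, len(overnight))
activity_based = score(50, activity_hours(overnight, baseline))

if __name__ == "__main__":
    print(f"wall-clock: {wall_clock:.2f}, activity-based: {activity_based:.2f}")
```

Overnight, eight quiet hours count as less than one activity hour, so a post submitted late is not buried before its audience wakes up.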

Conclusion: Social Media Algorithms

This formula has its weaknesses, but it can be extended to overcome them. Even in its simplest form, it shows how just a couple of parameters can sometimes be enough to steer and serve a large amount of content in a natural, organic way.

No algorithm is perfect; a good one is always evolving.